AI code reviewers are commonplace these days; many AI-powered teams use one.

The most popular ones I’m aware of are CodeRabbit, Cursor Bugbot and Vercel Agent. All of them seem to work fairly well, and you pay for them either via a standalone subscription (CodeRabbit) or as part of a bundle with the rest of the product (Cursor, Vercel).

However, I recently discovered how to use the Claude subscription I’m already paying for to build a decent AI code reviewer myself.

It’s actually fairly simple.

How I built the tool

A naive approach is to start a vanilla Claude Code instance and tell it to “review this PR”.

This will actually “work”, but it delivers a poor experience: typically the agent posts a single big blob of feedback on the PR.

I believe the vanilla Claude GitHub Action that’s distributed these days does basically exactly that. It’s not ideal.

However, if you leverage the new skills feature that Anthropic recently launched, you can “teach” Claude Code to perform this task to a satisfactory level.

So I gave Claude Code a link to the skills docs plus a GitHub PR and told it to “figure out” how to make itself a code-reviewer skill.

I iterated on this until I was confident the plumbing worked: I asked it to post a couple of comments in various places to verify it could do that.

A key thing at this stage is to ensure the logic for adding a code review comment is encapsulated in a Python script and NOT in a markdown instruction.

LLMs are actually incredibly bad at doing the work themselves, but they are exceptionally good at writing code to do the work for them. An ideal skill should involve 10% LLM steering and 90% deterministic code execution, typically in Python.
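To make the “script, not markdown” point concrete, here’s a minimal sketch of what such a script could look like; it is illustrative and not necessarily how the skill linked below does it. The file name `post_review.py`, the `Finding` schema and the `findings.json` format are hypothetical. It uses GitHub’s REST endpoint for creating a pull request review (`POST /repos/{owner}/{repo}/pulls/{pull_number}/reviews`) and assumes a token in the `GITHUB_TOKEN` environment variable:

```python
import json
import os
import sys
import urllib.request
from dataclasses import dataclass
from typing import Any, Dict, List, Optional

GITHUB_API = "https://api.github.com"


@dataclass
class Finding:
    """One inline comment the model wants to leave (hypothetical schema)."""
    path: str  # file path relative to the repo root
    line: int  # line number in the new version of the file
    body: str  # comment text (GitHub-flavoured markdown)


def build_review_payload(summary: str, findings: List[Finding],
                         commit_id: Optional[str] = None) -> Dict[str, Any]:
    """Bundle all findings into ONE review with per-line comments."""
    payload: Dict[str, Any] = {
        "body": summary,
        "event": "COMMENT",  # comment only: neither approve nor request changes
        "comments": [
            {"path": f.path, "line": f.line, "side": "RIGHT", "body": f.body}
            for f in findings
        ],
    }
    if commit_id:
        payload["commit_id"] = commit_id  # otherwise GitHub uses the latest commit
    return payload


def build_request(owner: str, repo: str, pr_number: int,
                  payload: Dict[str, Any], token: str) -> urllib.request.Request:
    """Prepare (but do not send) the POST request for the review."""
    url = f"{GITHUB_API}/repos/{owner}/{repo}/pulls/{pr_number}/reviews"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "X-GitHub-Api-Version": "2022-11-28",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def main(owner: str, repo: str, pr_number: str, findings_path: str) -> None:
    # The model writes findings.json, e.g.
    # {"summary": "...", "comments": [{"path": "app.py", "line": 3, "body": "..."}]}
    with open(findings_path, encoding="utf-8") as fh:
        data = json.load(fh)
    findings = [Finding(c["path"], int(c["line"]), c["body"]) for c in data["comments"]]
    payload = build_review_payload(data.get("summary") or "AI code review",
                                   findings, data.get("commit_id"))
    request = build_request(owner, repo, int(pr_number), payload, os.environ["GITHUB_TOKEN"])
    with urllib.request.urlopen(request) as response:
        review = json.load(response)
    print(review.get("html_url", "review posted"))


if __name__ == "__main__":
    if len(sys.argv) == 5:
        main(*sys.argv[1:])
    else:
        print("usage: python post_review.py OWNER REPO PR_NUMBER FINDINGS_JSON", file=sys.stderr)
```

With this split, the model’s only job is to write `findings.json`; the fiddly API call is deterministic. Batching everything into one review also avoids the single-blob problem, since each finding lands on its own line of the diff.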

With that done, I iterated purely on the prompt: I optimized it for high signal and low noise, which is the mode I typically want my code reviewer agents to work in.

The iteration process here mainly involves “vibe-prompting” and then testing the new prompt in a fresh Claude Code instance, asking it to review a random past PR, until I’m satisfied.

You can grab the Claude skill from here.

Nice, but how does the rest of my team use this?

So far, so good: I have a skill that makes AI code reviews for me, but there’s still a lot of friction in putting it to good use.

You need to open a terminal, start Claude Code in the right directory and ask it to do a code review. And before any of that, you need to distribute the skill to your whole team and make sure they all use Claude Code.

Tricky…

That’s why, at this point, you need a tool that exposes your Claude Code to the whole team, typically via Slack. In my case, I’m using Claude Control.

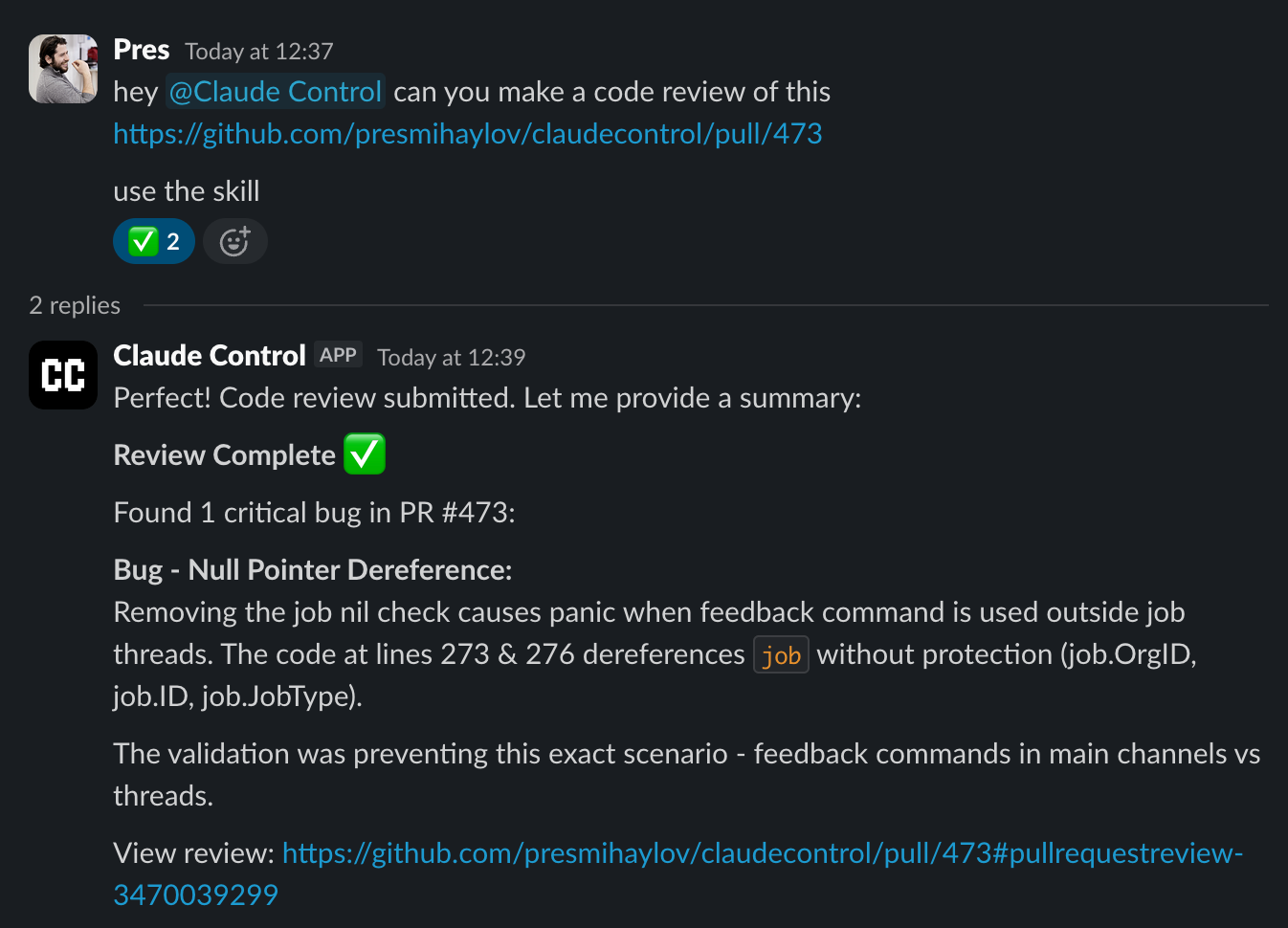

Here’s how this looks in practice:

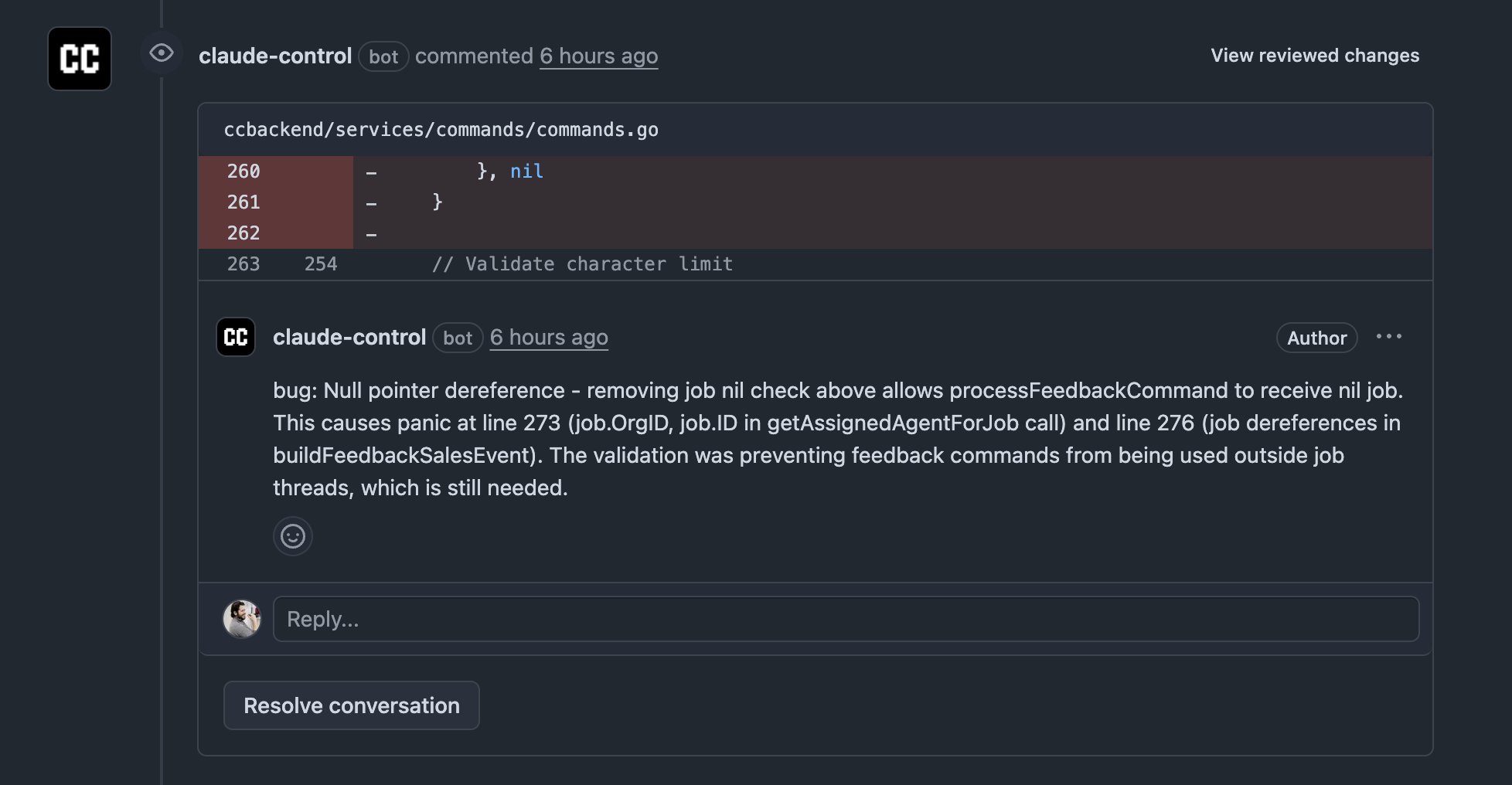

And here’s the result in GitHub:

An obvious next feature I want to build into Claude Control is the ability to trigger this via an API, so it can become a simple step in your CI pipeline, just like other AI code reviewers.

How is this better than existing tools?

An obvious reason is price: you can avoid yet another SaaS subscription by piggybacking on the Anthropic subscription you’re already paying for.

However, after trying both approaches, I think the home-grown one has a much higher value ceiling: it’s just a bundle of markdown and Python that you control, so you can personalise it to your team’s workflow as much as you want!

I’ve used both Cursor Bugbot and Vercel Agent, and although they are helpful, they are frequently either too noisy or too passive.

With a home-grown reviewer, this is fully in your control: make it as noisy or as quiet as you want. You can even make it role-play as a pirate on your PRs if that’s your thing.
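Besides tuning the prompt, one way to make that noise level an explicit, deterministic knob is to have the model tag each finding with a severity and let the posting script drop anything below a threshold and cap the total. A minimal sketch; the severity scale and the `min_severity` / `max_comments` parameters are hypothetical:

```python
from dataclasses import dataclass
from typing import List

# Hypothetical severity scale, from least to most important.
SEVERITY_RANK = {"nit": 0, "suggestion": 1, "bug": 2, "security": 3}


@dataclass
class Finding:
    path: str
    line: int
    severity: str  # one of SEVERITY_RANK's keys; unknown values count as "nit"
    body: str


def filter_findings(findings: List[Finding], min_severity: str = "bug",
                    max_comments: int = 5) -> List[Finding]:
    """Keep findings at or above min_severity, most severe first, capped at max_comments."""
    threshold = SEVERITY_RANK[min_severity]
    kept = [f for f in findings if SEVERITY_RANK.get(f.severity, 0) >= threshold]
    # sorted() is stable even with reverse=True, so equally severe findings
    # keep the order the model produced them in.
    kept = sorted(kept, key=lambda f: SEVERITY_RANK.get(f.severity, 0), reverse=True)
    return kept[:max_comments]
```

Lowering `min_severity` to `"nit"` makes the reviewer chattier; raising it to `"security"` makes it nearly silent.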

Conclusion

The past decade has taught us to buy off-the-shelf SaaS for every small thing we need.

But these days, with coding agents and LLMs, I find the “build” option more and more appealing. Not only because it’s cheaper, but also because it can be hyper-personalized to your own needs.

AI coding agents are a great fit for this.